How to Avoid Zero-spacing in Fractionally-Strided Convolution? A Hardware-Algorithm Co-design Methodology

A Multi-Channel-Multi-Kernel KN2ROW Approach for FSC

Abstract

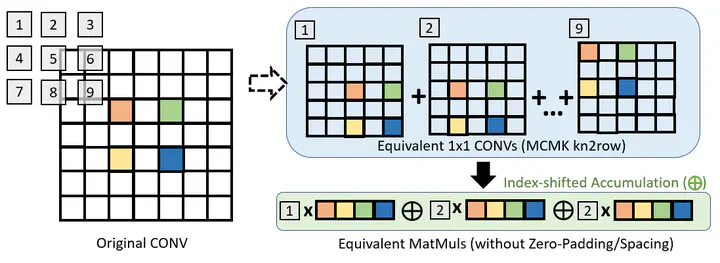

Fractionally Strided Convolution (FSC) is a key operation in popular image-based deep learning models, e.g., CNN backpropagation, the decoding stage of convolutional auto-encoders, and generative CNNs (GANs). Unlike conventional (down-)convolution, FSC performs up-convolution on a 2-D image grid, i.e., it expands the input into a larger output, which produces more complex computation patterns. Specifically, it introduces interleaved zero-spacing (i.e., insertion and padding of zeros) in the feature maps, which imposes excessive computation and memory-access overheads on traditional convolution methods such as im2col. The resulting hardware under-utilization is especially severe in layers with large kernels and large strides, which are common in typical CNNs and generative CNNs. In this paper, we propose a methodology that addresses this challenge with kn2row, a multi-channel-multi-kernel parallel algorithm, to eliminate zero-computations in FSC. We further develop a unified accelerator for kn2row-based convolution and FSC operations using High-Level Synthesis (HLS). Benefiting from the compute reduction of kn2row, we achieve up to 14.6× improvement in effective resource utilization in typical convolutional auto-encoder decoding layers, GAN layers, and the backward pass of Nature-CNN, a reinforcement-learning benchmarking model.
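To make the zero-spacing overhead concrete, the following is a minimal NumPy sketch, not the paper's HLS accelerator. It contrasts two ways of computing the same FSC (transposed convolution) output. The first, the hypothetical `fsc_zero_insertion`, builds the zero-spaced and zero-padded feature map and runs an ordinary sliding-window convolution with a spatially flipped kernel, so every zero is multiplied. The second, the hypothetical `fsc_kn2row`, follows the kn2row idea as we understand it: it decomposes the K×K kernel into K² 1×1 convolutions (each a small matrix multiply over the unpadded input) and shift-adds every partial result into the output with the given stride, so no zero is ever multiplied. The kernel layout `(C_in, C_out, K, K)`, square kernels, the absence of output padding/cropping, and the use of multiply-accumulate (MAC) counts as a stand-in for utilization are all assumptions made for illustration.

```python
import numpy as np


def fsc_zero_insertion(x, w, stride):
    """Hypothetical reference FSC via explicit zero-spacing.

    x: input feature map, shape (C_in, H, W)
    w: kernel, shape (C_in, C_out, K, K)  (assumed layout)
    Returns (output of shape (C_out, (H-1)*stride+K, (W-1)*stride+K), MAC count).
    """
    c_in, h, wd = x.shape
    _, c_out, k, _ = w.shape
    # Insert (stride - 1) zeros between neighbouring input pixels ...
    hz, wz = (h - 1) * stride + 1, (wd - 1) * stride + 1
    xz = np.zeros((c_in, hz, wz), dtype=x.dtype)
    xz[:, ::stride, ::stride] = x
    # ... then pad K - 1 zeros on every border.
    xp = np.pad(xz, ((0, 0), (k - 1, k - 1), (k - 1, k - 1)))
    oh, ow = hz + k - 1, wz + k - 1
    # A transposed conv equals a direct conv with the spatially flipped kernel.
    wf = w[:, :, ::-1, ::-1]
    y = np.zeros((c_out, oh, ow), dtype=x.dtype)
    for i in range(oh):
        for j in range(ow):
            patch = xp[:, i:i + k, j:j + k]  # (C_in, K, K), mostly zeros
            y[:, i, j] = np.einsum("chw,cohw->o", patch, wf)
    macs = c_out * oh * ow * c_in * k * k  # every zero is multiplied too
    return y, macs


def fsc_kn2row(x, w, stride):
    """Hypothetical kn2row-style FSC: K*K 1x1 GEMMs plus strided shift-add."""
    c_in, h, wd = x.shape
    _, c_out, k, _ = w.shape
    oh, ow = (h - 1) * stride + k, (wd - 1) * stride + k
    x2d = x.reshape(c_in, h * wd)  # each column is one (non-zero) input pixel
    y = np.zeros((c_out, oh, ow), dtype=x.dtype)
    macs = 0
    for a in range(k):
        for b in range(k):
            # 1x1 convolution for kernel tap (a, b): (C_out x C_in) @ (C_in x H*W).
            partial = w[:, :, a, b].T @ x2d
            macs += c_out * c_in * h * wd
            # Shift-add: input pixel (i, j) lands on output (stride*i + a, stride*j + b).
            y[:, a:a + (h - 1) * stride + 1:stride,
                 b:b + (wd - 1) * stride + 1:stride] += partial.reshape(c_out, h, wd)
    return y, macs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((3, 4, 4))     # C_in = 3, 4x4 input
    w = rng.standard_normal((3, 2, 5, 5))  # C_out = 2, 5x5 kernel
    y_ref, macs_ref = fsc_zero_insertion(x, w, stride=2)
    y_k2r, macs_k2r = fsc_kn2row(x, w, stride=2)
    assert np.allclose(y_ref, y_k2r)
    print(y_ref.shape, macs_ref, macs_k2r)  # (2, 11, 11) 18150 2400
```

Both functions produce the same output; only the work differs. In this sketch the MAC ratio is (output pixels)/(input pixels), i.e. ((H-1)s+K)((W-1)s+K)/(HW), which approaches s² for large feature maps, so the waste from zero-spacing grows with the stride. This MAC ratio is only a rough proxy and is not the effective-resource-utilization metric reported above.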